Artificial intelligence is evolving at breakneck speed. Hardware is the fuel behind this acceleration. At the center of this race are three giants: Apple, NVIDIA, and Intel. Each is betting on different strategies to drive the next generation of AI.

Apple, true to its philosophy of seamless integration, has perfected an artificial brain that lives in our pockets and on our desktops. NVIDIA has built veritable cathedrals of silicon, designed to power the most ambitious generative models ever created. Intel, meanwhile, has chosen to democratize AI, bringing it from supercomputers to office PCs.

This is not simply a competition for technical specifications. It’s a race to define the digital experience in the next chapter of technological history.

How AI Chips Work (In a Nutshell)

AI chips are designed to perform billions, or even trillions, of simple arithmetic operations per second, mostly multiplications and additions. These calculations power tasks like voice recognition, image generation, and recommendation algorithms.

Unlike general-purpose CPUs, AI chips rely on specialized processors such as GPUs and NPUs (neural processing units), whose many small cores handle matrix operations in parallel. The result is faster AI processing with lower energy use per operation.
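To make "matrix operations" concrete, here is a minimal Python sketch of a matrix multiplication, the core workload of most neural networks. It uses plain lists and is not how any real chip or library implements it. The function names `matmul` and `matmul_ops` are hypothetical, chosen for illustration. The key point is in the comment: every output cell is an independent dot product, which is exactly the kind of work GPUs and NPUs spread across many cores at once.

```python
def matmul(a, b):
    """Multiply an m x k matrix `a` by a k x n matrix `b` (lists of lists)."""
    m, k = len(a), len(a[0])
    k2, n = len(b), len(b[0])
    if k != k2:
        raise ValueError("inner dimensions must match")
    # Each output cell c[i][j] is an independent dot product: no cell depends
    # on any other, so parallel hardware can compute many of them at once.
    return [
        [sum(a[i][p] * b[p][j] for p in range(k)) for j in range(n)]
        for i in range(m)
    ]


def matmul_ops(m, k, n):
    """Arithmetic operations in an (m x k) @ (k x n) product.

    There are m * n * k multiply-accumulates (MACs); counting each MAC as
    two operations (one multiply, one add) is a common convention behind
    TOPS and FLOPS figures.
    """
    return 2 * m * n * k
```

For example, `matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]])` returns `[[19, 22], [43, 50]]`. Under the two-operations-per-MAC convention, a single product of two 4096 × 4096 matrices needs about 1.4 × 10^11 operations, which would take roughly 3.6 ms even at the M4 Neural Engine's peak 38 TOPS, assuming perfect utilization.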


Apple: The Whispered Revolution

The Apple M4 represents the culmination of Apple’s vision for how AI should integrate into our daily lives: invisible, respectful, and deeply personal.

This on-device AI philosophy not only protects privacy but also avoids the network latency of cloud-based services. When Siri recognizes your voice in a noisy room, or when your photos are magically organized by people and places without ever leaving your device, it’s the Neural Engine working silently in the background, on M4-powered iPads and Macs.

Apple’s vision reportedly extends to the data center through “Project ACDC” (Apple Chips in Data Center), which would use its own chips to power more advanced generative AI services while maintaining the balance between cloud capabilities and local privacy.

NVIDIA: Unleashed Power

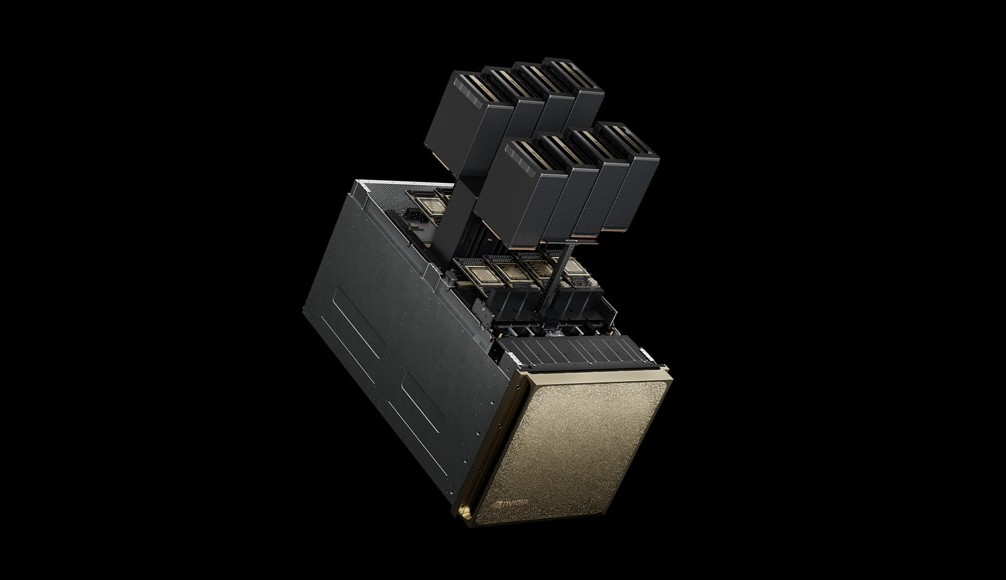

NVIDIA embodies raw, transformative power. Its Blackwell architecture, embodied in the B100 and B200 GPUs, is designed to train and run inference on AI models that would have seemed like science fiction just a few years ago.

The practical results are impressive: according to NVIDIA, Blackwell-based systems deliver up to 15 times the inference performance of the previous (Hopper) generation, and the platform is designed to scale to models with up to 10 trillion parameters. It’s no surprise that Meta, OpenAI, and Google are among the companies NVIDIA named as early Blackwell adopters for their next generation of generative models.

When you interact with ChatGPT, Midjourney, or similar systems, there is a good chance NVIDIA GPUs are doing the work behind the scenes. But their impact goes further: from advanced medical diagnostics to complex climate simulations, these GPUs are driving scientific research that was previously impossible to conduct.

NVIDIA has not neglected edge computing either. Its DRIVE Thor system brings this power to autonomous vehicles and robots, allowing generative AI to run directly on these complex devices.


Intel: The Democracy of Intelligence

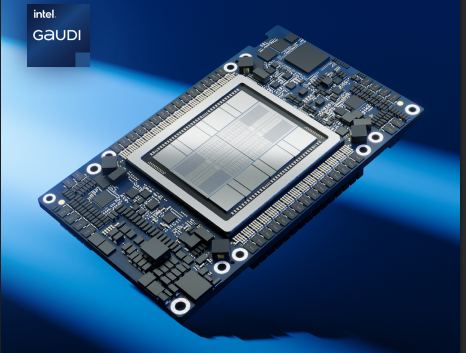

Intel opted for a diversified strategy, bringing AI capabilities to all computational levels, from servers to personal devices. The Gaudi 3 accelerator represents Intel’s bid to compete directly with NVIDIA GPUs in large-scale AI training.

In parallel, Intel has strengthened its Xeon data center CPUs with the Xeon 6 family, which comes in two complementary types: Sierra Forest, built on efficiency cores (E-cores) and optimized for density, and Granite Rapids, built on performance cores (P-cores) and focused on performance. This allows companies of all sizes to incorporate AI capabilities into their existing infrastructures.

On the personal device front, Intel has brought AI acceleration to the PC market with its Core Ultra family, which integrates a dedicated Neural Processing Unit (NPU). This strategy has given rise to the concept of the “AI PC,” where functions such as intelligent assistants, local content generation, and integrated copilots are accelerated directly in the PC hardware.

The Real Impact: Beyond the Numbers

Behind the technical specifications, what’s truly revolutionary is how these advances are transforming our digital experience:

With Apple

AI becomes personal and discreet. Your device learns your preferences, anticipates your needs, and improves your productivity without compromising your privacy. From automatic photo enhancement to instant voice note transcription, AI integrates so naturally into the experience that you barely notice its presence.

With NVIDIA

The limits of what’s possible expand dramatically. It’s AI as a transformative force on an industrial scale.

- Generative chatbots: understand complex contexts and generate nuanced responses.

- Medical diagnostic systems: flag pathologies that the human eye might miss.

- Digital creators: generate photorealistic images and videos from simple text descriptions.

With Intel

The democratization of AI means these capabilities reach more people and organizations:

- Small businesses can train or fine-tune custom models without investing in costly specialized infrastructure.

- Home users enjoy virtual assistants that work without constant connection to the cloud.

- Students learn with adaptive educational tools that run on conventional computers.


A Revealing Comparison: Philosophies Translated to Hardware

This table summarizes how each of these tech giants translates its AI vision into its most advanced hardware for 2025:

| Company | Product and Approach | Key Specifications | Impact on End User |
|---|---|---|---|
| Apple | M4: Personal AI on devices | 16-core Neural Engine (38 TOPS); 10-core CPU (4P+6E); 10-core GPU; 28B transistors at 3nm; unified architecture | Fluid, private AI experiences without cloud dependency; sensitive data protection; long battery life with always-on AI |
| NVIDIA | Blackwell B200: Generative AI in data centers | 18 petaFLOPS (FP4); 9 petaFLOPS (FP8); 4.5 petaFLOPS (FP16); 2.2 petaFLOPS (TF32); 192GB HBM3e at 8TB/s; 208B transistors; NVLink 1.8TB/s | Massive generative models; advances in chatbots, multimedia creation, and virtual assistants; complex scientific applications; infrastructure for “AI factories” |
| NVIDIA | DRIVE Thor: Autonomous AI | 1,000 TOPS (INT8/FP8); approx. 2 petaFLOPS in low precision; MIG for workload isolation; ASIL-D support | Vehicles and robots with autonomous decision-making; real-time content generation; embedded systems with generative capabilities |
| Intel | Gaudi 3: Accessible generative AI | 1.8 petaFLOPS (FP8/BF16); 128GB HBM2e at 3.7TB/s; 64 tensor processor cores (TPCs); 24×200Gbps Ethernet connectivity | Training and inference of large models at lower cost; open alternative for enterprise clouds; greater accessibility for startups and research centers |
| Intel | Xeon 6th Gen: Enterprise AI | Sierra Forest: 144 E-cores; Granite Rapids: 128 P-cores; improved AMX with FP16 support; up to 500MB L3 cache | Servers that combine traditional workloads with AI; sensitive data processing locally; efficient hybrid infrastructures; scalable AI microservices |
| Intel | Core Ultra: AI on PC | NPU 13-45 TOPS depending on model; up to 99 TOPS combined in premium versions; hybrid architecture with P and E cores | The “AI PC”: local personal assistants; multimedia creation without cloud dependency; enhanced video conferencing; private data processing on device |
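Peak figures tell only part of the story. The Python sketch below is a rough, idealized illustration, not a benchmark. It estimates upper bounds on how fast a hypothetical 70-billion-parameter model stored in 8-bit weights could generate text one token at a time on the two data center accelerators, using their FP8 throughput and memory bandwidth as listed in the table. It assumes about 2 operations per parameter per generated token and that every weight is read from memory once per token. It ignores batching, caching, sparsity, and multi-chip setups. The `Accelerator` class and `tokens_per_second_bounds` function are hypothetical names for this example.

```python
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    peak_ops_per_s: float       # peak FP8 throughput, as listed in the table
    mem_bw_bytes_per_s: float   # memory bandwidth, as listed in the table


def tokens_per_second_bounds(acc, params, bytes_per_param=1.0):
    """Idealized batch-size-1 generation bounds for a dense model.

    Assumes ~2 operations per parameter per token and that all weights are
    read from memory once per token. Returns (compute_bound, memory_bound);
    the achievable rate is at most the smaller of the two.
    """
    compute_bound = acc.peak_ops_per_s / (2 * params)
    memory_bound = acc.mem_bw_bytes_per_s / (params * bytes_per_param)
    return compute_bound, memory_bound


chips = [
    Accelerator("NVIDIA B200 (FP8)", 9e15, 8e12),
    Accelerator("Intel Gaudi 3 (FP8)", 1.8e15, 3.7e12),
]

# Hypothetical 70-billion-parameter model with 1-byte (8-bit) weights,
# small enough (70 GB) to fit in either chip's on-package memory.
PARAMS = 70e9

for chip in chips:
    compute, memory = tokens_per_second_bounds(chip, PARAMS)
    print(f"{chip.name}: compute-bound {compute:,.0f} tok/s, "
          f"memory-bound {memory:,.0f} tok/s")
```

Under these assumptions the B200 is capped at roughly 114 tokens per second by memory bandwidth, far below its compute-bound figure of about 64,000, and Gaudi 3 at roughly 53. In this simplified model, memory bandwidth rather than raw petaFLOPS limits single-stream generation, which helps explain why HBM capacity and speed feature so prominently in these specifications.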

The Confluence of Divergent Paths

Apple, NVIDIA, and Intel are redefining what computing means in the AI era, each from its own distinct but complementary perspective. Together, these seemingly divergent paths are converging into an ecosystem where AI is not just an emerging technology but a transformative force already reshaping our relationship with digital technology.

From the chip powering your iPhone to the supercomputers training the world’s most sophisticated models, this silent revolution is just beginning to reveal its true potential.

The battle for AI hardware in 2025 is not just a technological competition; it’s a vision of how artificial intelligence will shape our immediate future. And if these advances are any indication, that future promises to be as astonishing as it is transformative.